Users impacted and the importance of “Context”

Clearly, errors or defects impact software quality, but not all errors are equal when it comes to prioritizing them for attention. And without classifying them effectively, how do you know you are working on the most important errors first?

We talked about how a single Quality Index score is derived from five measures, listed here in order of importance: Error Types, Users Impacted, Error Frequency, Context Impacted, and Number of Issues. Today we’ll focus on two fundamental factors that affect our Quality Index score: Users Impacted and Context Impacted.

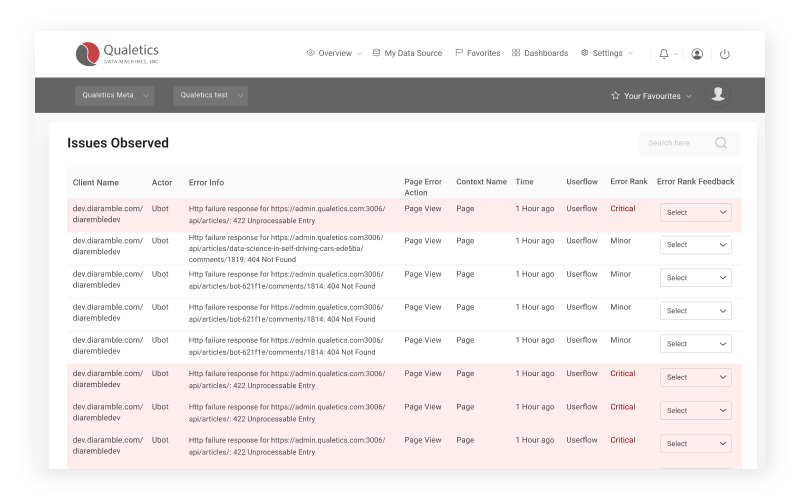

Let’s start with Users Impacted, a fairly straightforward metric. Because our plug-ins ingest every click a user makes for analysis, we can identify who is taking which actions and associate those actions with errors when they occur. We analyze that activity and trap errors either through our automated detection features or through error identifiers our customers can set when installing our plug-ins. We then associate each error with the number of users it impacted. The more users an error impacts, the more attention that error may deserve.
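To make the idea concrete, here is a minimal sketch of how a Users Impacted count could be computed from a stream of click events. It is an illustration only, not our plug-in's actual implementation: the event shape and the field names `user_id` and `error_id` are assumptions made for this example.

```python
from collections import defaultdict


def users_impacted(events):
    """Map each error id to the number of distinct users who hit it.

    `events` is a list of dicts with hypothetical keys `user_id` and
    `error_id`; `error_id` is None when the click produced no error.
    """
    users_by_error = defaultdict(set)
    for event in events:
        error_id = event.get("error_id")
        if error_id is not None:
            # A set ignores repeat occurrences by the same user.
            users_by_error[error_id].add(event["user_id"])
    return {error_id: len(users) for error_id, users in users_by_error.items()}


def rank_by_users_impacted(events):
    """Return (error_id, user_count) pairs, most users first."""
    counts = users_impacted(events)
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Made-up sample data for illustration.
events = [
    {"user_id": "u1", "error_id": "checkout_failed"},
    {"user_id": "u2", "error_id": "checkout_failed"},
    {"user_id": "u1", "error_id": "checkout_failed"},  # same user again
    {"user_id": "u3", "error_id": "shipping_preview_failed"},
    {"user_id": "u2", "error_id": None},  # click with no error
]

ranking = rank_by_users_impacted(events)
```

Counting distinct users rather than raw occurrences keeps this measure separate from Error Frequency: one user hitting the same error ten times still counts as one user impacted.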

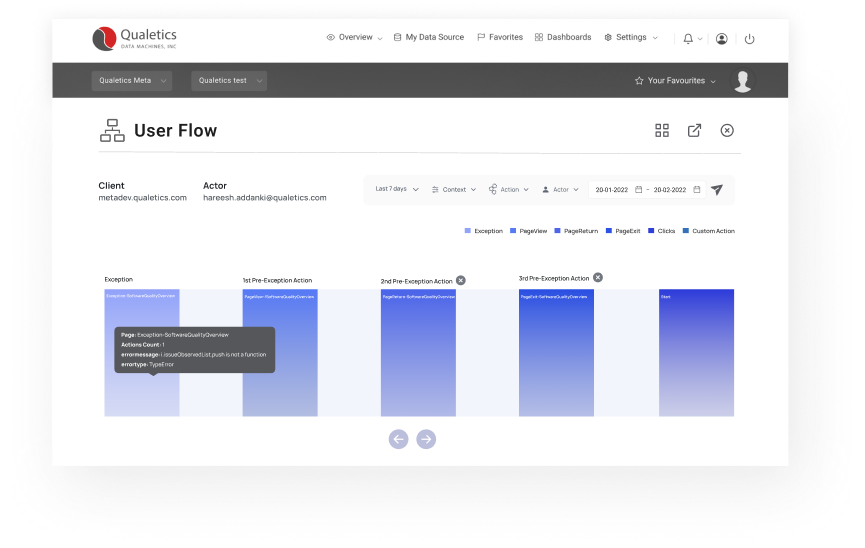

But as we said, not all errors are equal. Some errors may be annoying without interrupting the most important parts of the user experience. For instance, consider an eCommerce-focused mobile app in which we identified two errors: one that impacted the checkout process for every iOS user, and another that interrupted the feature allowing a user to preview the cost of an express shipping option. The error classification algorithm would classify the checkout context as “critical” and the shipping lookup context as “minor”. This algorithm applies machine learning to learn the context of each part of a software experience’s user flow in order to classify errors, and it offers our customers a feedback loop that can adjust the program’s learning to improve the classification results.
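As a rough illustration of how context severity and the feedback loop could interact, the sketch below uses a plain lookup table in place of the machine-learning model, so it is a deliberately simplified stand-in and not the algorithm described above. The class name, the context names, and the choice to default unknown contexts to “minor” are all assumptions made for this example.

```python
# Higher rank means more important; only the two labels used in this post.
SEVERITY_RANK = {"minor": 0, "critical": 1}


class ContextClassifier:
    """Toy stand-in for a learned classifier: a lookup table plus overrides."""

    def __init__(self, initial_labels, default="minor"):
        self.labels = dict(initial_labels)
        self.default = default  # assumption: unknown contexts start as minor

    def classify(self, context):
        return self.labels.get(context, self.default)

    def feedback(self, context, severity):
        """Record a customer correction so later classifications use it."""
        if severity not in SEVERITY_RANK:
            raise ValueError(f"unknown severity: {severity!r}")
        self.labels[context] = severity


def prioritize(errors, classifier):
    """Sort errors by context severity first, then by users impacted."""
    return sorted(
        errors,
        key=lambda e: (
            -SEVERITY_RANK[classifier.classify(e["context"])],
            -e["users_impacted"],
        ),
    )


# Made-up sample data for illustration.
classifier = ContextClassifier({"checkout": "critical", "shipping_preview": "minor"})
errors = [
    {"context": "shipping_preview", "users_impacted": 500},
    {"context": "checkout", "users_impacted": 120},
]

before = [e["context"] for e in prioritize(errors, classifier)]

# A customer decides the shipping preview matters just as much to them.
classifier.feedback("shipping_preview", "critical")
after = [e["context"] for e in prioritize(errors, classifier)]
```

In this sample, the checkout error ranks first at the start even though it affects fewer users, because its context is critical. After the customer's feedback both contexts are critical, so the error affecting more users moves to the top.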

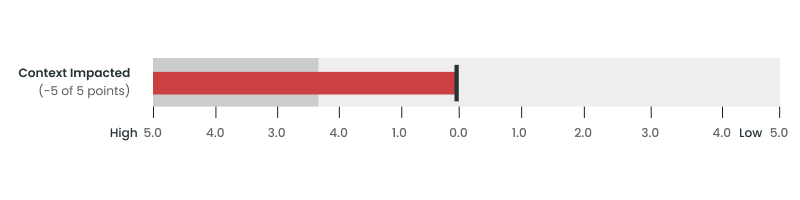

The accompanying image shows the Context Impacted component of the Quality Index. In this customer example, only one context was impacted, and it was a relatively insignificant part of the user experience.

With Users Impacted and Context Impacted objectively measured, we have captured two of the five quality measures that make up our Quality Index. Together with the other three, they give our customers an objective measure of positive or negative progress and help answer the question “How is our software doing?”

Our upcoming posts will describe how Error Types, Error Frequency, and Number of Issues round out our Quality Index.

Let us know what you think in the comments and please like or share this post if you find it interesting!

Please refer to our earlier blog in this software intelligence series for more information.