A Reformed Idealist’s AI Prediction for 2020

Reformed idealist, you ask? Being an idealist can make for more interesting company, so it wasn’t easy to admit that I’ve evolved to embrace my pragmatic side. Consistent with my newfound pragmatism, I’ll start with an observation, then dive into my AI prediction for 2020 and why I’ve arrived at this forecast.

AI is new enough to many businesses, and its potential so irresistible, that their approach to it can seem like cats chasing lasers. Cats chase the red dot because it stimulates their natural prey drive; they instinctively have to respond even though they don’t know what they’ll do if they catch it. They’ll figure that out later.

But the age of AI being treated like a shiny new object is passing. There’s less patience for figuring out what to do with it, and 2020 has become the year organizations will expect AI to generate business results. The difficult climate all businesses find themselves in today requires a focus on results: increased revenue where it can be generated and cost containment wherever possible. Add to this that business people have been hearing about the virtues of “big data” for years now, and saying things like “We used to see ourselves as a _____ company but recognize our future relies as much on us being a data company.” Remember when Amazon just sold books? It’s high time more AI solutions were adopted across enterprises.

There is no denying the allure of applying AI algorithms that seem to magically convert data into visualizations that illuminate insights hidden in plain sight. But delivering on their true potential relies on the less glamorous, more difficult task of operationalizing AI solutions at scale throughout an enterprise, something few organizations have mastered.

A common scenario plays out for organizations as they explore AI. They’ll hire a data scientist or two, or fund a consulting engagement, in the hope of developing a solution that drives meaningful results for their business. Through hard work and collaboration they’ll refine an algorithm and develop impactful visualizations. A pilot group of managers approves the work and escalates it to executives, who enthusiastically approve deploying the solution. At this point, the feeling of success is similar to that of capturing that laser dot. The pilot’s impact is promising but not tangible; it’s not a scaled solution yet. This is where the project stalls for lack of an actionable plan that anticipates and addresses the complexity of scaling the AI solution they’ve developed. What follows is a long, difficult, iterative process of overcoming those complexities, one that frustrates most people involved in the project.

Moving AI ideas from the drawing board to enterprise-wide impact can benefit greatly from adopting a Data Intelligence as a Service (DIaaS) approach, very similar to the Software as a Service (SaaS) model so common today. Below are a couple of real-life examples of how deploying AI on a DIaaS platform accelerates operationalizing AI solutions. In both cases, solutions were developed and deployed in a matter of days and weeks, not months and years. The first is a municipality focused on better management of its public transportation system. The second is a market intelligence solution with an innovative approach to monitoring consumer sentiment for grocery products.

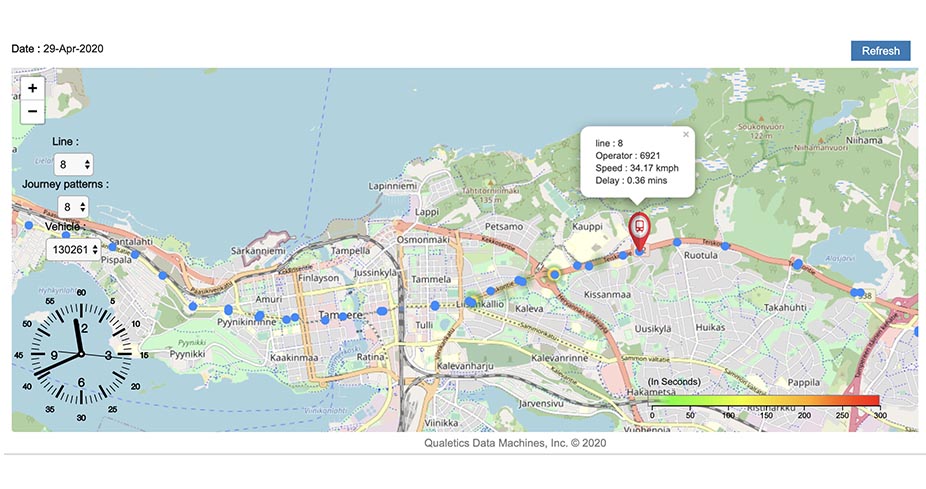

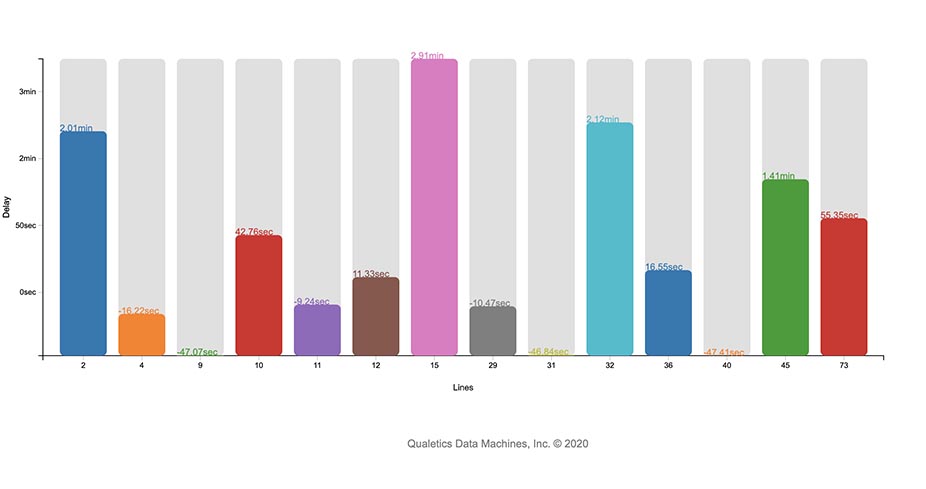

The municipality manages a public transportation system supported by 364 buses running 57 routes across 1,643 stop points every day. Their buses contain IoT sensors capable of streaming real-time location and mechanical data. In addition to tracking route-specific progress (see illustration), their solution supports timely monitoring of route, driver, and bus performance, among other data, across their overall system.

The implementation challenges included ingesting, storing, and processing data streamed continuously from the IoT sensors active on the buses. User-based provisioning features in the DIaaS platform serve managers the data and visualizations aligned with their functional responsibilities. Storage capacity and report filters let them track the full depth and breadth of historical data, which supports a broad range of historical reporting and accurate trending to project future requirements and improve planning. The transportation portal was built and deployed in about eight weeks, with new analytics and visualizations added since.
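To make the provisioning idea a little more concrete, here is a minimal Python sketch. It is not the platform’s actual code. It assumes each sensor reading has already been reduced to a hypothetical `StopEvent` with a delay in minutes, that “on time” means no more than five minutes late (an arbitrary threshold chosen for illustration), and that a manager’s profile is simply a set of route IDs. All names and sample values are made up.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class StopEvent:
    """One hypothetical sensor reading: a bus arriving at a stop."""
    bus_id: str
    route_id: str
    delay_minutes: float  # positive means late, negative means early


def on_time_rate_by_route(events, tolerance_minutes=5.0):
    """Return, per route, the share of arrivals within the lateness tolerance."""
    totals = defaultdict(int)
    on_time = defaultdict(int)
    for event in events:
        totals[event.route_id] += 1
        if event.delay_minutes <= tolerance_minutes:
            on_time[event.route_id] += 1
    return {route: on_time[route] / totals[route] for route in totals}


def visible_to(manager_routes, rates):
    """Keep only the routes assigned to a manager (user-based provisioning)."""
    return {route: rate for route, rate in rates.items() if route in manager_routes}


# Illustrative sample data, not real measurements.
events = [
    StopEvent("bus-101", "route-7", 2.0),
    StopEvent("bus-101", "route-7", 9.5),
    StopEvent("bus-214", "route-12", -1.0),
    StopEvent("bus-214", "route-12", 0.5),
]
rates = on_time_rate_by_route(events)
print(visible_to({"route-7"}, rates))  # {'route-7': 0.5}
```

A real deployment would compute this over a continuous stream rather than an in-memory list, but the shape is the same: aggregate first, then filter by what each user is responsible for.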

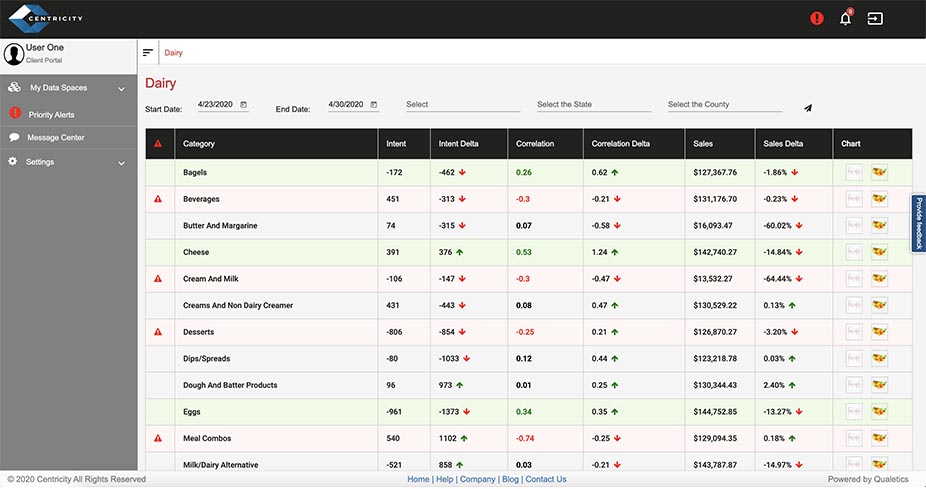

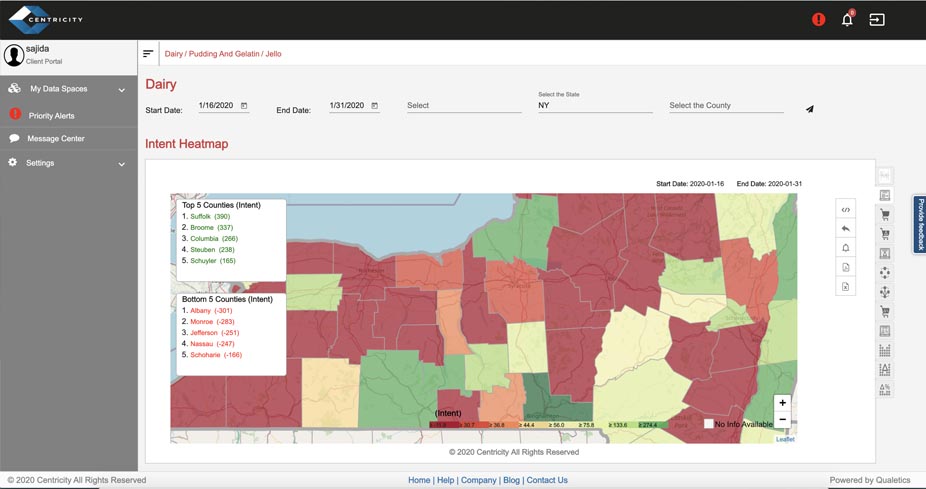

Our second example is an innovative new customer intelligence company named Centricity, serving the consumer packaged goods and grocery market. What is so innovative is that, whereas analytics in that market has always focused on historic sales performance to gauge market size and market share, Centricity concentrates on where market demand is going next. They accomplish this by analyzing over 850 million product-specific data points on consumer sentiment every day, giving their customers forward-looking insights. This market analysis approach was never before available in that market, but it makes so much sense. Wouldn’t you rather drive your car facing forward than facing backward?

As obvious as their approach may seem, it hasn’t been attempted before because of significant obstacles that technology is only now able to overcome. Centricity needed the ability to ingest, annotate, and store huge volumes of consumer sentiment data on a daily basis. They push the envelope even further by correlating their customers’ daily sales data with the sentiment data. Then, based on the profile of the user logged into the system (typically a product or category manager responsible for a specific grocery or consumer goods category), the platform presents only those product categories or geographies that the manager or executive manages, so they don’t have to sift through unnecessary detail.

The first screen is an analysis of the dairy product category showing customer sales data and consumer intent for a broad range of dairy-related products, and measuring their correlation over a specified time period. The second screen shows how that intent data is translated to a heatmap at the county level, indicating where this customer is losing ground in product sales relative to market sentiment.
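For readers curious what “measuring their correlation” can look like in practice, here is a small Python sketch using the Pearson correlation coefficient. The source does not say which measure Centricity uses, so treat Pearson as an assumption, and note that the function name and the five days of sample figures are invented for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Hypothetical daily figures for one product category over the same five days.
daily_sales = [120, 135, 150, 160, 155]
daily_sentiment = [0.10, 0.20, 0.35, 0.40, 0.38]

r = pearson(daily_sales, daily_sentiment)
print(f"correlation over the period: {r:.2f}")
```

A value near 1 means sales and sentiment rose and fell together over the period; a value near 0 or below suggests sales are not keeping pace with sentiment, which is the kind of gap a county-level heatmap could then surface.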

Just as in the first example, this customer’s analytics platform was developed in about eight weeks, at which point user acceptance testing began, with another eight weeks or so of feedback, fixes, and adjustments. In four months, user acceptance testing was completed, the platform was available to demonstrate to beta test prospects, and the first round of new enhancements was in development.

So it’s 2020. If you are struggling to develop or deploy a data analytics, machine learning, or AI solution at some level in your organization, think of it in the same context as deploying a SaaS solution. Consider how you’ll accommodate scalability over time, security, user permissioning, the UI, and ongoing support. And if you are looking for ideas or assistance, consider Qualetics and our on-demand DIaaS (Data Intelligence as a Service) platform. We can help you overcome many of the barriers to scaling AI, such as time, resources, and infrastructure.